# 十、Redis 集群高可用

# 1. 节点与插槽管理

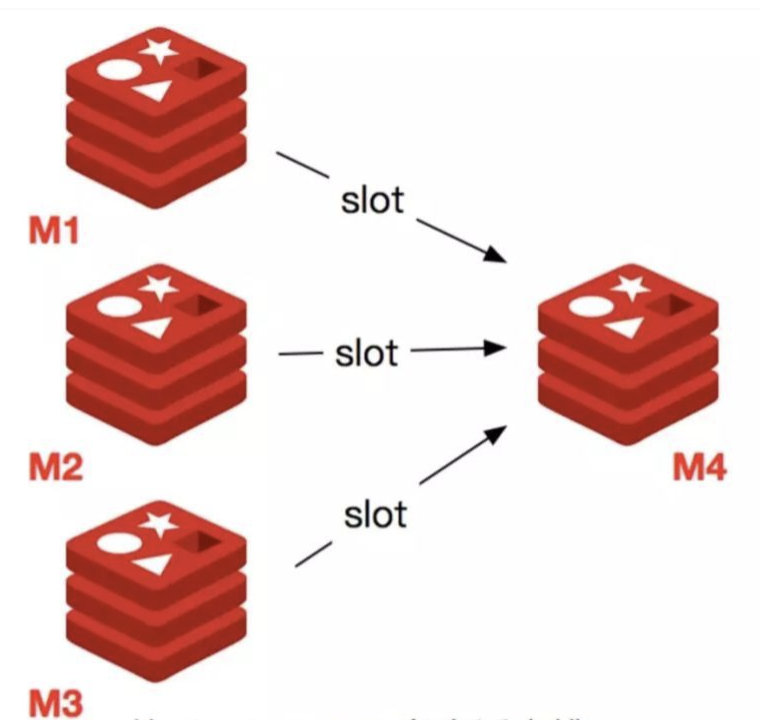

# 1.1 添加主节点

步骤

- 准备节点 M4;

- 启动节点 M4;

- 使用

add-node将节点加入到集群当中; - 使用

reshard对集群重新分配,给 M4 分配槽;

# 1.1.1 准备节点

随便在一个节点上添加如下配置文件:

vim /usr/local/redis/cluster/conf/redis-6377.conf内容:

# 放行 IP 限制 bind 0.0.0.0 # 端口 port 6377 # 后台启动 daemonize yes # 日志存储目录以及日志文件名 logfile "/usr/local/redis/cluster/log/redis-6377.log" # RDB 数据文件名 dbfilename dump-6377.rbd # AOF 模式开启和 AOF 数据文件名 appendonly yes appendfilename "appendonly-6377.aof" # RDB 数据文件和 AOF 数据文件的存储目录 dir /usr/local/redis/cluster/data # 设置密码 requirepass 123456 # 从节点访问主节点密码(必须与 requirepass 一致) masterauth 123456 # 是否开启集群模式,默认是 no cluster-enabled yes # 集群节点信息文件,会保存在 dir 配置对应目录下 cluster-config-file nodes-6377.conf # 集群节点连接超时时间,单位是毫秒 cluster-node-timeout 15000 # 集群节点 IP cluster-announce-ip 172.16.58.200 # 集群节点映射端口 cluster-announce-port 6377 # 集群节点总线端口 cluster-announce-bus-port 16377

# 1.1.2 启动节点

/usr/local/redis/bin/redis-server /usr/local/redis/cluster/conf/redis-6377.conf

# 1.1.3 将节点加入集群

语法

redis-cli -a requirepass --cluster add-node new_host:new_port exiting_host:exitsing_port --cluster-master-id node_idrequirepass:密码new_host:新节点所在 IPnew_port:新节点端口existing_host:集群中最后一个 Master 的 IPexisting_port:集群中最后一个 Master 的端口node_id:集群中最后一个节点的 ID

查看集群节点信息

redis-cli -a 123456 -h 172.16.58.200 -p 6371 cluster nodes输出:

8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372@16372 master - 0 1628560132000 7 connected 10923-16383 be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375@16375 slave 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 0 1628560132391 7 connected ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373@16373 master - 0 1628560132000 3 connected 5461-10922 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374@16374 slave 8306858f71a9a4f43c644bf4426ae2c6561b36a5 0 1628560133394 1 connected 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376@16376 slave ad98c789913e6cb9db293379509d39d8856d41b2 0 1628560134400 3 connected 8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371@16371 myself,master - 0 1628560133000 1 connected 0-5460可以看到集群中最后一个节点是

8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371将 6377 加入集群中

redis-cli -a 123456 --cluster add-node 172.16.58.200:6377 172.16.58.200:6371 --cluster-master-id 8306858f71a9a4f43c644bf4426ae2c6561b36a5输出:

>>> Adding node 172.16.58.200:6377 to cluster 172.16.58.200:6371 >>> Performing Cluster Check (using node 172.16.58.200:6371) M: 8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371 slots:[0-5460] (5461 slots) master 1 additional replica(s) M: 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372 slots:[10923-16383] (5461 slots) master 1 additional replica(s) S: be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375 slots: (0 slots) slave replicates 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 M: ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373 slots:[5461-10922] (5462 slots) master 1 additional replica(s) S: 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374 slots: (0 slots) slave replicates 8306858f71a9a4f43c644bf4426ae2c6561b36a5 S: 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376 slots: (0 slots) slave replicates ad98c789913e6cb9db293379509d39d8856d41b2 [OK] All nodes agree about slots configuration. >>> Check for open slots... >>> Check slots coverage... [OK] All 16384 slots covered. >>> Send CLUSTER MEET to node 172.16.58.200:6377 to make it join the cluster. [OK] New node added correctly.

# 1.1.4 重新分片

先来看当前集群状态:

redis-cli -a 123456 --cluster check 172.16.58.201:6373输出:

172.16.58.201:6373 (ad98c789...) -> 0 keys | 5462 slots | 1 slaves. 172.16.58.200:6377 (71568f93...) -> 0 keys | 0 slots | 0 slaves. 172.16.58.200:6372 (8f18a0cf...) -> 1 keys | 5461 slots | 1 slaves. 172.16.58.200:6371 (8306858f...) -> 210524 keys | 5461 slots | 1 slaves. [OK] 210525 keys in 4 masters. 12.85 keys per slot on average. >>> Performing Cluster Check (using node 172.16.58.201:6373) M: ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373 slots:[5461-10922] (5462 slots) master 1 additional replica(s) S: 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374 slots: (0 slots) slave replicates 8306858f71a9a4f43c644bf4426ae2c6561b36a5 M: 71568f93af0eb55bcd25083bb5b6b22115efaafc 172.16.58.200:6377 slots: (0 slots) master M: 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372 slots:[10923-16383] (5461 slots) master 1 additional replica(s) M: 8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371 slots:[0-5460] (5461 slots) master 1 additional replica(s) S: be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375 slots: (0 slots) slave replicates 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 S: 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376 slots: (0 slots) slave replicates ad98c789913e6cb9db293379509d39d8856d41b2 [OK] All nodes agree about slots configuration. >>> Check for open slots... >>> Check slots coverage... [OK] All 16384 slots covered.可以看到 6377 已经加入集群中了,但是没有分配槽给它。

添加完新节点后,需要对新添加的主节点进行 hash 槽重新分配,这样该主节点才能存储数据,Redis 共有 16384 个槽,语法如下:

redis-cli -a requirepass --cluster reshard host:port --cluster-from node_id1 --cluster-to node_id2 --cluster-slots <args> --cluster-yesrequirepass:密码host:port:随便集群中的一个 IP 和端口node_id1:表示分配的槽的来源node_id2:表示分配的槽的取向<args>:分配多少槽的范围--cluster-yes:不回显槽的分配情况,表示默认同意分配

运行:

redis-cli -a 123456 --cluster reshard 172.16.58.200:6371 --cluster-from 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 --cluster-to 71568f93af0eb55bcd25083bb5b6b22115efaafc --cluster-slots 3000 --cluster-yes再次查看集群状态:

redis-cli -a 123456 --cluster check 172.16.58.201:6373输出:

M: ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373 slots:[5461-10922] (5462 slots) master 1 additional replica(s) S: 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374 slots: (0 slots) slave replicates 8306858f71a9a4f43c644bf4426ae2c6561b36a5 M: 71568f93af0eb55bcd25083bb5b6b22115efaafc 172.16.58.200:6377 slots:[10923-13922] (3000 slots) master M: 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372 slots:[13923-16383] (2461 slots) master 1 additional replica(s) M: 8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371 slots:[0-5460] (5461 slots) master 1 additional replica(s) S: be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375 slots: (0 slots) slave replicates 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 S: 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376 slots: (0 slots) slave replicates ad98c789913e6cb9db293379509d39d8856d41b2现在 6377 已经分配了 [10923-13922] 的槽了,至此,主节点就添加完毕了。

# 1.2 添加从节点

步骤

- 准备节点 S4;

- 启动节点 S4;

- 使用

add-node将节点加入到集群当中,并用--cluater-salve声明该节点为从节点,且使用--cluster-master-id指定主节点 ID;

# 1.2.1 准备节点

随便在一个节点上添加如下配置文件:

vim /usr/local/redis/cluster/conf/redis-6378.conf内容:

# 放行 IP 限制 bind 0.0.0.0 # 端口 port 6378 # 后台启动 daemonize yes # 日志存储目录以及日志文件名 logfile "/usr/local/redis/cluster/log/redis-6378.log" # RDB 数据文件名 dbfilename dump-6378.rbd # AOF 模式开启和 AOF 数据文件名 appendonly yes appendfilename "appendonly-6378.aof" # RDB 数据文件和 AOF 数据文件的存储目录 dir /usr/local/redis/cluster/data # 设置密码 requirepass 123456 # 从节点访问主节点密码(必须与 requirepass 一致) masterauth 123456 # 是否开启集群模式,默认是 no cluster-enabled yes # 集群节点信息文件,会保存在 dir 配置对应目录下 cluster-config-file nodes-6378.conf # 集群节点连接超时时间,单位是毫秒 cluster-node-timeout 15000 # 集群节点 IP cluster-announce-ip 172.16.58.201 # 集群节点映射端口 cluster-announce-port 6378 # 集群节点总线端口 cluster-announce-bus-port 16378

# 1.2.2 启动节点

/usr/local/redis/bin/redis-server /usr/local/redis/cluster/conf/redis-6378.conf

# 1.2.3 将节点加入集群

语法:

redis-cli -a reuqirepass --cluster add-node new_host:new_port exsiting_host:existing_port --cluater-salve --cluster-master-id node_idreuqirepass:密码new_host:新节点所在 IPnew_port:新节点所在端口exisiting_host:该从节点要跟的主节点的 IPexisiting_port:该从节点要跟的主节点的端口node_id:该从节点要跟的主节点的 ID

运行:

redis-cli -a 123456 --cluster add-node 172.16.58.201:6378 172.16.58.200:6377 --cluster-slave --cluster-master-id 71568f93af0eb55bcd25083bb5b6b22115efaafc输出:

>>> Adding node 172.16.58.201:6378 to cluster 172.16.58.200:6377 >>> Performing Cluster Check (using node 172.16.58.200:6377) M: 71568f93af0eb55bcd25083bb5b6b22115efaafc 172.16.58.200:6377 slots:[10923-13922] (3000 slots) master M: ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373 slots:[5461-10922] (5462 slots) master 1 additional replica(s) S: be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375 slots: (0 slots) slave replicates 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 M: 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372 slots:[13923-16383] (2461 slots) master 1 additional replica(s) S: 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374 slots: (0 slots) slave replicates 8306858f71a9a4f43c644bf4426ae2c6561b36a5 M: 8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371 slots:[0-5460] (5461 slots) master 1 additional replica(s) S: 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376 slots: (0 slots) slave replicates ad98c789913e6cb9db293379509d39d8856d41b2 [OK] All nodes agree about slots configuration. >>> Check for open slots... >>> Check slots coverage... [OK] All 16384 slots covered. >>> Send CLUSTER MEET to node 172.16.58.201:6378 to make it join the cluster. Waiting for the cluster to join >>> Configure node as replica of 172.16.58.200:6377. [OK] New node added correctly.

# 1.2.4 查询集群状态

执行:

redis-cli -a 123456 --cluster check 172.16.58.201:6378输出:

172.16.58.201:6373 (ad98c789...) -> 0 keys | 5462 slots | 1 slaves. 172.16.58.200:6377 (71568f93...) -> 0 keys | 3000 slots | 1 slaves. 172.16.58.200:6372 (8f18a0cf...) -> 1 keys | 2461 slots | 1 slaves. 172.16.58.200:6371 (8306858f...) -> 210524 keys | 5461 slots | 1 slaves. [OK] 210525 keys in 4 masters. 12.85 keys per slot on average. >>> Performing Cluster Check (using node 172.16.58.201:6378) S: 35724039f2e9d7f43c349718fee303b0681e454e 172.16.58.201:6378 slots: (0 slots) slave replicates 71568f93af0eb55bcd25083bb5b6b22115efaafc M: ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373 slots:[5461-10922] (5462 slots) master 1 additional replica(s) S: 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374 slots: (0 slots) slave replicates 8306858f71a9a4f43c644bf4426ae2c6561b36a5 M: 71568f93af0eb55bcd25083bb5b6b22115efaafc 172.16.58.200:6377 slots:[10923-13922] (3000 slots) master 1 additional replica(s) S: 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376 slots: (0 slots) slave replicates ad98c789913e6cb9db293379509d39d8856d41b2 M: 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372 slots:[13923-16383] (2461 slots) master 1 additional replica(s) M: 8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371 slots:[0-5460] (5461 slots) master 1 additional replica(s) S: be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375 slots: (0 slots) slave replicates 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 [OK] All nodes agree about slots configuration. >>> Check for open slots... >>> Check slots coverage... [OK] All 16384 slots covered.可以看到 6377 也已经有从节点了,至此,从节点就添加完毕了。

# 1.3 删除从节点

步骤

- 使用

del-node将节点从集群中移除; - 使用 kill 或 SHUTDOWN 停止节点进程。

删除从节点就比较简单了,直接将它从集群中删除,然后杀死进程就可以了。

语法:

redis-cli -a requirepass --cluster del-node host:port node_id运行:

redis-cli -a 123456 --cluster del-node 172.16.58.201:6378 35724039f2e9d7f43c349718fee303b0681e454e输出:

>>> Removing node 35724039f2e9d7f43c349718fee303b0681e454e from cluster 172.16.58.201:6378 >>> Sending CLUSTER FORGET messages to the cluster... >>> Sending CLUSTER RESET SOFT to the deleted node.再次查看集群状态:

redis-cli -a 123456 --cluster check 172.16.58.201:6373输出:

M: ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373 slots:[5461-10922] (5462 slots) master 1 additional replica(s) S: 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374 slots: (0 slots) slave replicates 8306858f71a9a4f43c644bf4426ae2c6561b36a5 M: 71568f93af0eb55bcd25083bb5b6b22115efaafc 172.16.58.200:6377 slots:[10923-13922] (3000 slots) master M: 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372 slots:[13923-16383] (2461 slots) master 1 additional replica(s) M: 8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371 slots:[0-5460] (5461 slots) master 1 additional replica(s) S: be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375 slots: (0 slots) slave replicates 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 S: 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376 slots: (0 slots) slave replicates ad98c789913e6cb9db293379509d39d8856d41b2会发现从节点已经从集群中移除了。

停掉从节点进程:

ps -ef | grep redis kill -9 PID

# 1.4 删除主节点

步骤

- 使用

reshard将要删除的主节点 M4 的槽分配给其他节点; - 使用

del-node将 M4 从集群中移除; - 停止 M4 进程。

删除主节点稍微麻烦一点,因为主节点分配了槽,所以必须先把槽分配给其他可用节点后,才可以移除节点,不然会造成数据丢失。

# 1.4.1 重新分片

将数据移动到其他主节点去,执行重新分片命令:

redis-cli -a 123456 --cluster reshard 172.16.58.200:6377出现:

How many slots do you want to move (from 1 to 16384)?前面我们给 6377 分配了 2000 个槽,这里输入 2000 即可。

出现:

What is the receiving node ID?我们需要指定这些槽的取向,这里我们分配回给 6371,输入 6371 的 ID。

出现:

Please enter all the source node IDs. Type 'all' to use all the nodes as source nodes for the hash slots. Type 'done' once you entered all the source nodes IDs. Source node #1:这里是要指定槽从谁取,直接填写 6377 的 ID 即可。然后按回车,再输入 done。

也可以直接输入 all,从集群的所有 master 中随机取。

再输入:

yes

# 1.4.2 查看集群状态

执行:

redis-cli -a 123456 --cluster check 172.16.58.200:6371输出:

M: 8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371 slots:[0-6461],[10923-14372] (9912 slots) master 1 additional replica(s) M: 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372 slots:[14373-16383] (2011 slots) master 1 additional replica(s) M: 71568f93af0eb55bcd25083bb5b6b22115efaafc 172.16.58.200:6377 slots: (0 slots) master S: be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375 slots: (0 slots) slave replicates 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 M: ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373 slots:[6462-10922] (4461 slots) master 1 additional replica(s) S: 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374 slots: (0 slots) slave replicates 8306858f71a9a4f43c644bf4426ae2c6561b36a5 S: 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376 slots: (0 slots) slave replicates ad98c789913e6cb9db293379509d39d8856d41b2可以看到 6377 的 slots 为 0 了。

# 1.4.3 移除主节点

执行:

redis-cli -a 123456 --cluster del-node 172.16.58.200:6377 71568f93af0eb55bcd25083bb5b6b22115efaafc输出:

>>> Removing node 71568f93af0eb55bcd25083bb5b6b22115efaafc from cluster 172.16.58.200:6377 >>> Sending CLUSTER FORGET messages to the cluster... >>> Sending CLUSTER RESET SOFT to the deleted node.

# 1.4.4 检查集群状态

执行:

redis-cli -a 123456 --cluster check 172.16.58.200:6371输出:

M: 8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371 slots:[0-6461],[10923-14372] (9912 slots) master 1 additional replica(s) M: 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372 slots:[14373-16383] (2011 slots) master 1 additional replica(s) S: be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375 slots: (0 slots) slave replicates 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 M: ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373 slots:[6462-10922] (4461 slots) master 1 additional replica(s) S: 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374 slots: (0 slots) slave replicates 8306858f71a9a4f43c644bf4426ae2c6561b36a5 S: 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376 slots: (0 slots) slave replicates ad98c789913e6cb9db293379509d39d8856d41b2至此,6377 就从集群中移除了。

# 1.4.5 停止节点进程

ps -ef | grep redis

kill -9 PID

# 2. MOVED 转向

# 2.1 MOVED 错误演示

当我们使用操作 Redis 单节点的 Client 来操作集群时,比如使用以下方式登入 Client:

redis-cli -a 123456 -h 172.16.58.202 -p 6375

然后执行:

get username

会出现 MOVED 错误:

172.16.58.202:6375> get username

(error) MOVED 14315 172.16.58.200:6371

Redis 官方规范要求所有 Clieny 都应该处理 MOVED 错误,从而实现对用户的透明。

单击模式/集群模式下 MOVED 错误的显示区别:

- 集群模式:自动转向

- 单击模式:报错,需要开发者自主做出处理

# 2.2 解决措施

# 2.2.1 使用集群模式进入 Client

redis-cli -c -a 123456 -h 172.16.58.200 -p 6371

# 2.2.2 手动切换到正确节点

(error) MOVED 14315 172.16.58.200:6371

上述信息当中有该 slot 所在的节点,可以切换到该节点进行操作。

# 3. ASK 转向

# 3.1 ASK 错误

除了 MOVED 转向,Redis 规范还要求 Client 实现对 ASK 转向的处理。

当 Client 向源节点发送一个命令,并且命令要处理的键恰好就属于正在被迁移的槽时:

- 先在自己的数据里面查找指定的键,找到,就直接响应 Client;

- 但是,这个键有可能已经被迁移到了目标节点,源节点将向 Client 返回一个 ASK 错误。

单击模式/集群模式下 ASK 错误的显示区别:

- 集群模式:自动转向

- 单击模式:报错,需要开发者自主做出处理

# 3.2 解决措施

# 3.2.1 使用集群模式进入 Client

redis-cli -c -a 123456 -h 172.16.58.200 -p 6371

# 3.2.2 使用 ASKING 命令

172.16.58.202:6375> ASKING

OK

ASKING 命令打开 Redis ASKING 标识,执行 get 命令后,该标识会被去除,每次都需要 ASKING。

MOVED vs ASK

- MOVED 错误代表槽的负责权已经从一个节点转移到了另外一个节点,是长期的状态;

- ASK 错误只是两个节点在迁移槽的过程中使用的一种临时措施。

# 4. 自动故障转移

# 4.1 环境准备

- 启动 6 个 redis 节点,参考前面文章。

# 4.2 演示

查看集群状态

M: 8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371

slots:[0-6461],[10923-14372] (9912 slots) master

1 additional replica(s)

M: 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372

slots:[14373-16383] (2011 slots) master

1 additional replica(s)

S: be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375

slots: (0 slots) slave

replicates 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1

M: ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373

slots:[6462-10922] (4461 slots) master

1 additional replica(s)

S: 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374

slots: (0 slots) slave

replicates 8306858f71a9a4f43c644bf4426ae2c6561b36a5

S: 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376

slots: (0 slots) slave

replicates ad98c789913e6cb9db293379509d39d8856d41b

下面来演示 6371 故障后,它的从节点 6374 会自动顶上。

杀死 6371:

ps -ef | grep redis kill -9 PID查看 6374 日志:

tail -f -n 1000 /usr/local/redis/cluster/log/redis-6374.log输出:

# 6374 与主节点 6371 失去联系了 98575:S 09 Aug 2021 20:03:22.255 # Connection with master lost. 98575:S 09 Aug 2021 20:03:22.255 * Caching the disconnected master state. # 重新重新连接 98575:S 09 Aug 2021 20:03:23.080 * Connecting to MASTER 172.16.58.200:6371 98575:S 09 Aug 2021 20:03:23.080 * MASTER <-> REPLICA sync started # 连接失败 98575:S 09 Aug 2021 20:03:23.080 # Error condition on socket for SYNC: Connection refused 98575:S 09 Aug 2021 20:03:40.880 * FAIL message received from ad98c789913e6cb9db293379509d39d8856d41b2 about 8306858f71a9a4f43c644bf4426ae2c6561b36a5 # 集群进入 fail 状态 98575:S 09 Aug 2021 20:03:40.880 # Cluster state changed: fail # 开始选举 98575:S 09 Aug 2021 20:03:40.882 # Start of election delayed for 611 milliseconds (rank #0, offset 15019915). 98575:S 09 Aug 2021 20:03:41.184 * Connecting to MASTER 172.16.58.200:6371 # 主从复制 98575:S 09 Aug 2021 20:03:41.184 * MASTER <-> REPLICA sync started 98575:S 09 Aug 2021 20:03:41.185 # Error condition on socket for SYNC: Connection refused # 故障转移 98575:S 09 Aug 2021 20:03:41.584 # Starting a failover election for epoch 10. 98575:S 09 Aug 2021 20:03:41.588 # Failover election won: I'm the new master. 98575:S 09 Aug 2021 20:03:41.588 # configEpoch set to 10 after successful failover # 删除之前的 master 状态 98575:M 09 Aug 2021 20:03:41.588 * Discarding previously cached master state. # 更新 replication ID 98575:M 09 Aug 2021 20:03:41.588 # Setting secondary replication ID to 145f1a07ead94288b0324a632fcb1d1c2debbba7, valid up to offset: 15019916. New replication ID is a0d6838ae362f8f0416f3c21fb1ea7b25bf32187 # 集群进入 ok 状态 98575:M 09 Aug 2021 20:03:41.588 # Cluster state changed: ok再看集群状态:

redis-cli -a 123456 --cluster check 172.16.58.200:6372输出:

M: 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372 slots:[14373-16383] (2011 slots) master 1 additional replica(s) S: be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375 slots: (0 slots) slave replicates 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 M: 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374 slots:[0-6461],[10923-14372] (9912 slots) master S: 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376 slots: (0 slots) slave replicates ad98c789913e6cb9db293379509d39d8856d41b2 M: ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373 slots:[6462-10922] (4461 slots) master 1 additional replica(s)尝试获取 username,之前这个字段是存在 6371 上的:

172.16.58.201:6373> get username -> Redirected to slot [14315] located at 172.16.58.201:6374 "zhangsan"可以发现 username 已经转移到 6374 上面了,自动故障转移成功!

再次启动 6371:

redis-server redis-6371.conf查看集群状态:

M: 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372 slots:[14373-16383] (2011 slots) master 1 additional replica(s) S: be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375 slots: (0 slots) slave replicates 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 M: 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374 slots:[0-6461],[10923-14372] (9912 slots) master 1 additional replica(s) S: 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376 slots: (0 slots) slave replicates ad98c789913e6cb9db293379509d39d8856d41b2 S: 8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371 slots: (0 slots) slave replicates 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 M: ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373 slots:[6462-10922] (4461 slots) master 1 additional replica(s)可以看到 6371 已经成了 6374 的从节点了。

# 5. 手动故障转移

Redis Cluster 使用 CLUSTER FAILOVER 命令来进行故障转移,不过要在 被转移的主节点的从节点上 执行该命令。

# 5.1 故障转移

在刚刚降级为从节点的 6371 中执行

CLUSTER FAILOVER开启手动故障转移:redis-cli -c -a 123456 -h 172.16.58.200 -p 6371 cluster failover

# 5.2 查看集群状态

执行:

redis-cli -a 123456 --cluster check 172.16.58.200:6371输出:

M: 8306858f71a9a4f43c644bf4426ae2c6561b36a5 172.16.58.200:6371 slots:[0-6461],[10923-14372] (9912 slots) master 1 additional replica(s) M: ad98c789913e6cb9db293379509d39d8856d41b2 172.16.58.201:6373 slots:[6462-10922] (4461 slots) master 1 additional replica(s) M: 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 172.16.58.200:6372 slots:[14373-16383] (2011 slots) master 1 additional replica(s) S: 3c95fde7335c16c7f3fe323bde9cfc9778ca1d38 172.16.58.201:6374 slots: (0 slots) slave replicates 8306858f71a9a4f43c644bf4426ae2c6561b36a5 S: be0ddf4921a4a2f077b8c0a72504c8714c69b836 172.16.58.202:6375 slots: (0 slots) slave replicates 8f18a0cf21cc4bcc3d96c0e672edf0949abefad1 S: 7ba9475d72e3ff57b409e9ff2ee7ecbfce4a024f 172.16.58.202:6376 slots: (0 slots) slave replicates ad98c789913e6cb9db293379509d39d8856d41b2可以看到现在 6371 是 6374 的主节点了。

# 5.3 查看日志

查看 6371 日志:

tail -f -n 1000 redis-6371.log输出:

# 接受手动故障转移 33635:S 09 Aug 2021 20:15:20.816 # Manual failover user request accepted. # 准备转移 33635:S 09 Aug 2021 20:15:20.835 # Received replication offset for paused master manual failover: 15020307 # 开启手动故障转移 33635:S 09 Aug 2021 20:15:20.908 # All master replication stream processed, manual failover can start. # 选举 leader 33635:S 09 Aug 2021 20:15:20.908 # Start of election delayed for 0 milliseconds (rank #0, offset 15020307). 33635:S 09 Aug 2021 20:15:20.908 # Starting a failover election for epoch 11. # 等待投票 33635:S 09 Aug 2021 20:15:20.912 # Currently unable to failover: Waiting for votes, but majority still not reached. # 选举完毕 33635:S 09 Aug 2021 20:15:20.913 # Failover election won: I'm the new master. 33635:S 09 Aug 2021 20:15:20.913 # configEpoch set to 11 after successful failover 33635:M 09 Aug 2021 20:15:20.913 # Connection with master lost. 33635:M 09 Aug 2021 20:15:20.913 * Caching the disconnected master state. 33635:M 09 Aug 2021 20:15:20.913 * Discarding previously cached master state. 33635:M 09 Aug 2021 20:15:20.913 # Setting secondary replication ID to a0d6838ae362f8f0416f3c21fb1ea7b25bf32187, valid up to offset: 15020308. New replication ID is 213fd6cf96e35b3c470c6852357d9b438682a208 # 主从复制 33635:M 09 Aug 2021 20:15:21.851 * Replica 172.16.58.201:6374 asks for synchronization 33635:M 09 Aug 2021 20:15:21.851 * Partial resynchronization request from 172.16.58.201:6374 accepted. Sending 0 bytes of backlog starting from offset 15020308.查看 6374 日志:

tail -f -n 1000 redis-6374.log输出:

# 接受手动故障转移 98575:M 09 Aug 2021 20:15:20.608 # Manual failover requested by replica 8306858f71a9a4f43c644bf4426ae2c6561b36a5. 98575:M 09 Aug 2021 20:15:20.702 # Failover auth granted to 8306858f71a9a4f43c644bf4426ae2c6561b36a5 for epoch 11 98575:M 09 Aug 2021 20:15:20.706 # Connection with replica 172.16.58.200:6371 lost. 98575:M 09 Aug 2021 20:15:20.707 # Configuration change detected. Reconfiguring myself as a replica of 8306858f71a9a4f43c644bf4426ae2c6561b36a5 98575:S 09 Aug 2021 20:15:20.707 * Before turning into a replica, using my own master parameters to synthesize a cached master: I may be able to synchronize with the new master with just a partial transfer. 98575:S 09 Aug 2021 20:15:21.630 * Connecting to MASTER 172.16.58.200:6371 # 开始主从复制 98575:S 09 Aug 2021 20:15:21.631 * MASTER <-> REPLICA sync started 98575:S 09 Aug 2021 20:15:21.631 * Non blocking connect for SYNC fired the event. 98575:S 09 Aug 2021 20:15:21.637 * Master replied to PING, replication can continue... # 增量复制 98575:S 09 Aug 2021 20:15:21.644 * Trying a partial resynchronization (request a0d6838ae362f8f0416f3c21fb1ea7b25bf32187:15020308). 98575:S 09 Aug 2021 20:15:21.645 * Successful partial resynchronization with master. 98575:S 09 Aug 2021 20:15:21.645 # Master replication ID changed to 213fd6cf96e35b3c470c6852357d9b438682a208 98575:S 09 Aug 2021 20:15:21.645 * MASTER <-> REPLICA sync: Master accepted a Partial Resynchronization.

# 5.4 读取数据

现在再来 get username,数据应该转移到 6371 上了:

172.16.58.200:6372> get username

-> Redirected to slot [14315] located at 172.16.58.200:6371

"zhangsan

# 6. 集群迁移

# 6.1 手动迁移

源集群于目标集群结构一致

- 取消密码;

- 创建跟源集群结构一致的目标集群;

- 先从后主停掉目标集群服务;

- 删除目标集群中所有节点的 AOF 和 RDB 文件;

- 源集群数据持久化;

- 将源集群的所有节点的 AOF 和 RDB 文件复制到目标集群对应的节点上;

- 启动目标集群服务;

- 检查目标集群状态。

源集群于目标集群结构不一致

思路一:

- 先按照集群结构一致的情况进行迁移;

- 再进行集群的缩容或者扩容到之前指定的目标集群的结构。

思路二:

- 源集群和目标集群都将槽全部归到一个节点 A 上;

- 源集群对 A 进行持久化;

- 将源集群 A 节点的 AOF 和 RDB 文件复制到目标集群的 A 节点上;

- 启动目标集群;

- 目标集群进行槽的重新分配。

# 6.2 自动迁移 RedisShake

阿里开源的 Redis 自动化迁移工具。

- Github: https://github.com/alibaba/RedisShake/wiki

# 6.3 迁移检查 RedisFullCheck

阿里开源的配套工具,用于检查源和目标是否数据统一。

- Github: https://github.com/alibaba/RedisFullCheck/wiki